The Master tier Journey 2: December 2017

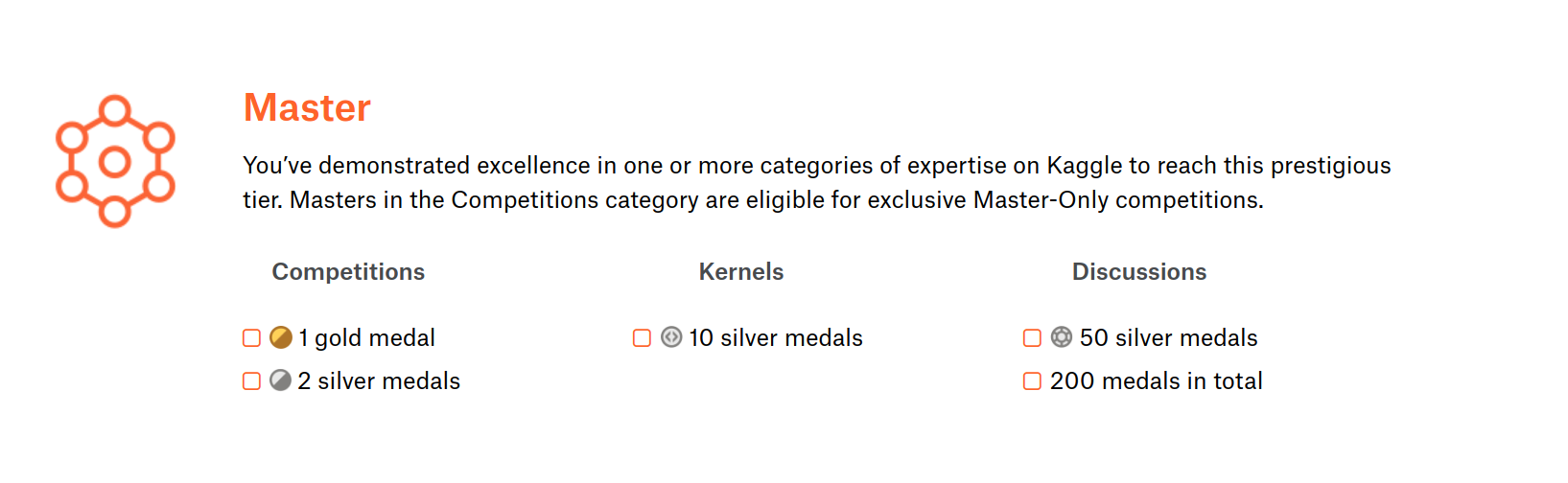

Kaggle Master tier requirements

This is the third post in the Master Tier journey. In this post, I will go through the competitions I worked on and other topics related to my goal of reaching the Kaggle Master tier.

How to win Data Science competitions online course

Taking this course was a no-brainer once I saw the course title, the instructors and the topics covered. I have written more detailed feedback on the course in a separate post. The course gave me much-needed structure for working on data science competitions, and I now feel more confident tackling data science problems instead of relying on the random approach I used before. It is also a humbling course, because you learn how far top Kagglers go to reach top solutions. The course includes a Kaggle competition, which I entered to practice the different methods I learned. It is an InClass competition, so it does not count toward the Master tier, but it is good practice. My current standing is Top 11% (24/222).

Titanic: Machine Learning from Disaster

I revisited this competition after learning about mean target encoding in the online course and decided to implement it here. Because the dataset is small and simple, mean target encoding was relatively easy to implement, and it led to a score improvement on the leaderboard, which is always good.
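Below is a minimal sketch of mean target encoding in pandas, assuming a Titanic-style frame with a categorical column (`Embarked`) and a binary target (`Survived`). The toy rows are made up, and `mean_target_encode` and `encode_test` are hypothetical helper names, not the code I actually submitted. Training rows are encoded out-of-fold, so a row's own label never contributes to its encoding, and categories that are not seen in the other folds fall back to the global target mean.

```python
import numpy as np
import pandas as pd


def mean_target_encode(train: pd.DataFrame, col: str, target: str,
                       n_splits: int = 5, seed: int = 0) -> pd.Series:
    """Out-of-fold mean target encoding of one categorical column (hypothetical helper)."""
    global_mean = train[target].mean()
    encoded = pd.Series(np.nan, index=train.index, dtype=float)
    # Shuffle row positions, then deal them into n_splits roughly equal folds.
    fold_ids = np.random.default_rng(seed).permutation(len(train)) % n_splits
    for fold in range(n_splits):
        in_fold = fold_ids == fold
        if not in_fold.any():
            continue  # possible when there are fewer rows than folds
        # Category means are computed only on rows outside the current fold.
        fold_means = train.loc[~in_fold].groupby(col)[target].mean()
        encoded.loc[in_fold] = train.loc[in_fold, col].map(fold_means).to_numpy()
    # Categories missing from the other folds fall back to the global mean.
    return encoded.fillna(global_mean)


def encode_test(train: pd.DataFrame, test: pd.DataFrame, col: str, target: str) -> pd.Series:
    """Encode test rows with category means from the full training set (hypothetical helper)."""
    means = train.groupby(col)[target].mean()
    return test[col].map(means).fillna(train[target].mean())


# Made-up toy rows in the shape of the Titanic data.
train = pd.DataFrame({
    "Embarked": ["S", "C", "Q", "S", "C", "S", "Q", "C", "S", "S"],
    "Survived": [0, 1, 0, 1, 1, 0, 1, 1, 0, 0],
})
test = pd.DataFrame({"Embarked": ["S", "C", "X"]})  # "X" never appears in train

train["Embarked_te"] = mean_target_encode(train, "Embarked", "Survived")
test["Embarked_te"] = encode_test(train, test, "Embarked", "Survived")
print(test)
```

The out-of-fold step is the design choice that matters here: encoding each training row with a category mean that includes its own label leaks the target and tends to make validation scores look better than they really are.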

Porto Seguro’s Safe Driver Prediction

I started this competition in November, but it ended after I posted my previous monthly update, so I did not get the chance to write about it in detail. This is the first featured competition (featured competitions count toward the Master tier) that I have participated in. At first I felt lost on where to start with the data, because the features in this competition are anonymized. Only their type is disclosed, so no domain information can be extracted easily (I say easily because I saw on the discussion forum that some Kagglers tried to match features with documents and forms from the company's website). I tried different models and did some feature engineering based on the work in other Kagglers' notebooks. I ended up in the Top 29% (1486/5169). Looking at the top solutions that were shared, I still have a long way to go, which should be fun.

Things I learned:

- Mean target encoding

- Feature interactions

- Hyperparameter tuning

- Stacking

- Downcasting data types to save memory
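Of the items above, downcasting is the easiest to show in isolation. This is a minimal sketch, assuming the data is already loaded into a pandas DataFrame; `downcast_numeric` is a hypothetical helper and the columns are made up. It relies on `pd.to_numeric(..., downcast=...)`, which picks the smallest dtype that can still hold the values.

```python
import numpy as np
import pandas as pd


def downcast_numeric(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df with numeric columns in smaller dtypes (hypothetical helper)."""
    out = df.copy()
    for col in out.columns:
        series = out[col]
        if pd.api.types.is_integer_dtype(series.dtype):
            # Non-negative integers can use unsigned types (uint8 holds 0..255).
            kind = "unsigned" if series.min() >= 0 else "integer"
            out[col] = pd.to_numeric(series, downcast=kind)
        elif pd.api.types.is_float_dtype(series.dtype):
            # float64 -> float32 halves the memory per value but may lose some precision.
            out[col] = pd.to_numeric(series, downcast="float")
        # Non-numeric columns (e.g. strings) are left unchanged.
    return out


# Made-up example frame; every numeric column starts as a 64-bit type.
df = pd.DataFrame({
    "age": np.array([22, 38, 26, 35], dtype="int64"),
    "offset": np.array([-1, 5, 100, 0], dtype="int64"),
    "fare": np.array([7.25, 71.5, 7.5, 53.0], dtype="float64"),
    "name": ["a", "b", "c", "d"],
})
small = downcast_numeric(df)
print(small.dtypes)  # age: uint8, offset: int8, fare: float32

before = df.memory_usage(deep=True).sum()
after = small.memory_usage(deep=True).sum()
print(f"{before} bytes -> {after} bytes")
```

Integer downcasting keeps every value intact, since the smaller type is only chosen if it can represent all of them; the float step is the one to apply with care on features where small differences matter.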

Recruit Restaurant Visitor Forecasting

This is the competition I am currently working on. The goal is to use reservation and visitation data from two systems to predict the total number of visitors to a restaurant on future dates. The final submission deadline is February 6th, so there is plenty of time to work on it and improve my standing.

Goal Progress:

- 0/2 Bronze medals

- 0/2 Silver medals

- 0/1 Gold medal

- Porto Seguro: Top 29% (1486/5169)

Blog and Medium posts

This is the highlight of the month: I managed to get my blog live on GitHub Pages. The goal of this blog is to document the projects I work on. I am planning to write posts on machine learning and AI topics that I learn about and find cool. I am also publishing these posts on Medium.

This month's stats:

- Blog and Medium posts: 6

- Medium stats: 83 views - 45 reads - 13 recommendations

Happy new year everyone!

These posts are written entirely to track my own personal progress. If you are reading this, thank you, and I hope you like it. If you have any thoughts or advice on this post, please reach out to me by email.

Till next time!